Alfred Intelligence 3: Building an AI Assistant

Trying to understand AI agents, the current hype in AI

If you are new here, welcome! Alfred Intelligence is where I keep my personal notes as I learn to be comfortable working on AI. Check out the first issue of Alfred Intelligence to understand why I started it.

In the first issue of Alfred Intelligence, I quoted Richard Feynman’s “What I cannot create, I do not understand”. Even though I have been looking into AI agents and played with Claude’s computer use, I only had an intuition of how AI agents work. Not how they actually work. So this past week, I built a basic AI agent with code and no agentic frameworks like LangChain. Doing it the hard way!

It was a humbling experience. There was so much I didn’t know or understand. There still is. But I also learned a lot along the way. Here are my notes:

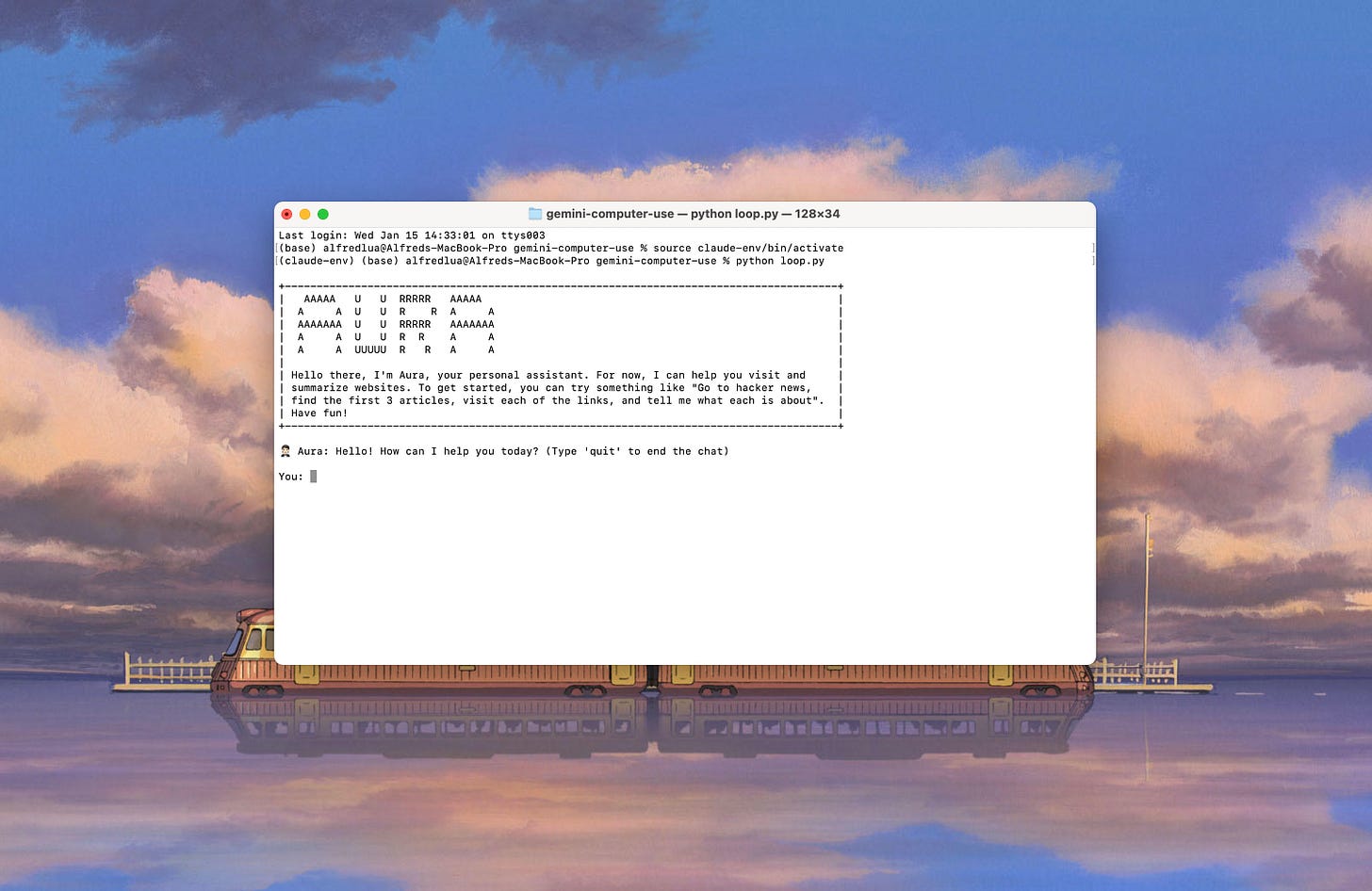

Hello, I’m Assistant Aura

When I told my cofounder SK about my plan, he suggested I rewrite Claude’s computer use program so that I will learn which parts are important and which are not. I’m not proficient enough to rewrite the entire program from scratch. So I set out to build a simple AI assistant, following the structure of Claude’s computer use code, but with Google’s Gemini API.

So far, the first version of my AI assistant Aura can visit websites (and websites within websites if you instruct it) and summarize the information.[1] For example, given the task, “Go to hacker news, find the first 3 posts, visit each of the links, and tell me what each is about”, it will:

1. Understand that Hacker News is at https://news.ycombinator.com/
2. Visit the URL
3. Scrape the web source
4. Identify the URLs of the first three posts
5. Iteratively visit each URL, scrape the web source, and summarize the content
6. Summarize the three summaries

Here’s a sample result:

The first story is about malicious NPM packages targeting Cursor.com, published by a Snyk security researcher. The second story is about the release of OpenZFS 2.3.0, which includes features like RAIDZ expansion and improved deduplication performance. The third is about Shavarsh Karapetyan, a Soviet-Armenian finswimming champion who rescued dozens of people from a trolleybus that plunged into Lake Yerevan.

So, what did I learn?

Loop is all you need

Ok, not exactly; this is just wordplay on the classic Transformer paper, Attention Is All You Need, which introduced the architecture ChatGPT relies on. But a loop is an important part of an AI agent, which is otherwise essentially just a large language model (LLM).

The while loop allows an agent to work continuously until it achieves its goal (or until it breaks somehow). The agent takes in a user input, plans its steps, acts, checks the progress, plans again, acts again, checks the progress again, plans again, acts again…

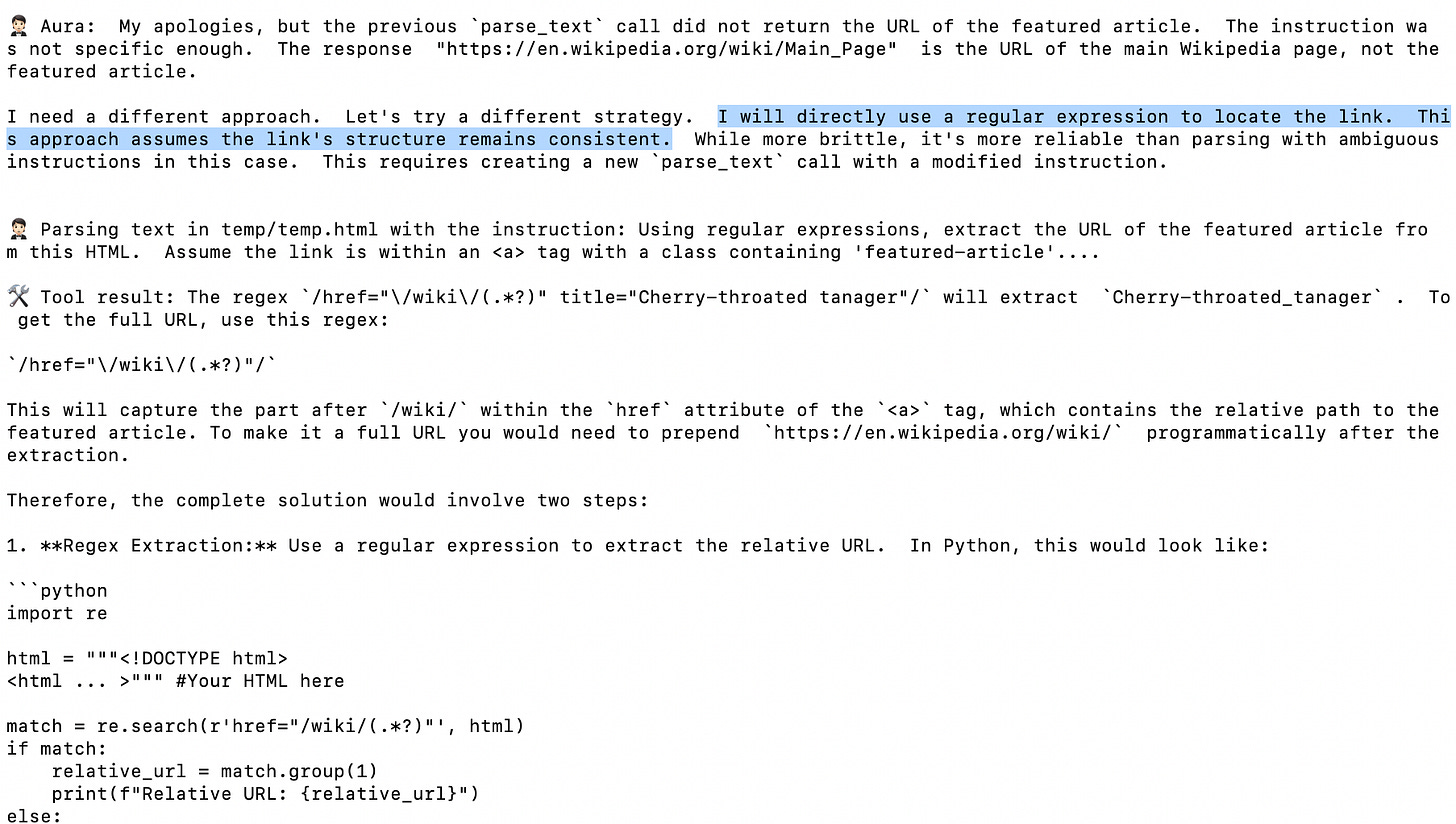

The amazing thing is if the agent fails to complete a step, it will try another approach on its own, without asking the user what to do. Of course, the agent might never succeed. After several attempts, it should break out of the loop, inform the user, and ask for help.
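To make the loop concrete, here is a minimal sketch in Python. It is not Aura's actual code: `run_agent`, `plan_next_step`, `act`, and the cap of five steps are all names and values I made up for illustration. In a real agent, `plan_next_step` would be an LLM call; here a fake planner stands in so the sketch runs on its own.

```python
from typing import Callable, List, Optional


def run_agent(
    task: str,
    plan_next_step: Callable[[str, List[str]], Optional[str]],
    act: Callable[[str], str],
    max_steps: int = 5,  # assumed cap; the right number depends on the task
) -> str:
    """Plan, act, check, and repeat until done or out of attempts."""
    history: List[str] = []
    steps_taken = 0
    while steps_taken < max_steps:
        # Hypothetical planner: returns the next step, or None when the goal is met.
        step = plan_next_step(task, history)
        if step is None:
            return "done: " + (history[-1] if history else "nothing to do")
        try:
            result = act(step)
        except Exception as error:
            # A failure is recorded, not fatal, so the planner can try another approach.
            result = f"failed: {error}"
        history.append(result)
        steps_taken += 1
    # Too many attempts: break out of the loop and ask the user for help.
    return f"stopped after {max_steps} steps: need help from the user"


def fake_planner(task: str, history: List[str]) -> Optional[str]:
    """Stand-in for the LLM: tries approach A, falls back to B after a failure."""
    if not history:
        return "approach A"
    if history[-1].startswith("failed"):
        return "approach B"
    return None


def fake_act(step: str) -> str:
    """Stand-in for running a tool: approach A always fails."""
    if step == "approach A":
        raise RuntimeError("blocked")
    return f"{step} worked"


if __name__ == "__main__":
    print(run_agent("demo task", fake_planner, fake_act))
```

The step cap is the important design choice: without it, a planner that keeps choosing a failing approach would loop forever.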

Functions as tools

Loop isn’t all you need because AI agents also need tools. Without tools, an agent is simply an LLM. LLMs don’t know anything that happened after the model was trained, have no internet access, and cannot run code. Tools, which are programming functions, enable LLMs to do things beyond simply generating text. And LLMs from major providers such as OpenAI, Anthropic, and Google are smart enough to know when they need to use the available tools. They return the name of the function to call, with suitable arguments, and the developer’s code makes the actual call. For example, if I ask it to visit Hacker News, it knows to use the visit_website function with the argument, “https://news.ycombinator.com”, which my code would then execute.

In the code, you have to “inform” the LLM about the tools (line 30) and what the tools do and require (lines 5 to 25).
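As a rough illustration of both halves (describing a tool and then running whatever the model asks for), here is a minimal sketch using plain Python dictionaries. The field names follow the JSON-schema-like shape that function-calling APIs commonly use, but the exact format differs by provider, so treat `VISIT_WEBSITE_TOOL`, `TOOLS`, and `execute_tool_call` as hypothetical names and not any SDK's real API.

```python
from typing import Any, Callable, Dict

# Hypothetical tool description: what the tool does and what it requires.
# Each provider expects its own variation of this shape; check their docs.
VISIT_WEBSITE_TOOL: Dict[str, Any] = {
    "name": "visit_website",
    "description": "Fetch a web page and return its source.",
    "parameters": {
        "type": "object",
        "properties": {
            "url": {"type": "string", "description": "Full URL to visit"},
        },
        "required": ["url"],
    },
}


def visit_website(url: str) -> str:
    """Placeholder tool; a real version would fetch the page."""
    return f"<html>source of {url}</html>"


# Maps the tool names the model may return to the functions that do the work.
TOOLS: Dict[str, Callable[..., str]] = {"visit_website": visit_website}


def execute_tool_call(name: str, args: Dict[str, Any]) -> str:
    """Run the function the model asked for, with the arguments it chose."""
    if name not in TOOLS:
        # Returned as text so it can be fed back to the model, which may try again.
        return f"error: unknown tool {name!r}"
    return TOOLS[name](**args)


if __name__ == "__main__":
    # Pretend the model replied with this function name and arguments.
    print(execute_tool_call("visit_website", {"url": "https://news.ycombinator.com"}))
```

The model never runs anything itself. It only names a function and its arguments, and the lookup in `TOOLS` is where my code decides what actually gets executed.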

You also have to create the function that will be called. For example, below is a web scraping tool that my Assistant Aura has access to. When it needs to understand what is on a website, which might not be in its training data or might be outdated in its training data, it uses this tool to obtain the website’s source code.
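A minimal sketch of such a scraping tool might look like the following. To keep it free of dependencies, it swaps Beautiful Soup for Python's built-in `html.parser`, and it trims the page down to visible text and links instead of returning the full source. Both choices are my assumptions for this example, as are the names `parse_page` and `LinkAndTextParser` and the made-up User-Agent string.

```python
from html.parser import HTMLParser
from typing import Dict, List
from urllib.request import Request, urlopen


class LinkAndTextParser(HTMLParser):
    """Collects visible text and link targets, skipping scripts and styles."""

    def __init__(self) -> None:
        super().__init__()
        self.links: List[str] = []
        self.text_parts: List[str] = []
        self._skip_depth = 0  # greater than 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.text_parts.append(data.strip())


def parse_page(html: str) -> Dict[str, object]:
    """Reduce raw HTML to the text and links an LLM needs to read."""
    parser = LinkAndTextParser()
    parser.feed(html)
    return {"text": " ".join(parser.text_parts), "links": parser.links}


def visit_website(url: str, timeout: float = 10.0) -> Dict[str, object]:
    """Fetch a static page and parse it; dynamic content will be missing."""
    request = Request(url, headers={"User-Agent": "AuraSketch/0.1"})  # made-up agent name
    with urlopen(request, timeout=timeout) as response:
        html = response.read().decode("utf-8", errors="replace")
    return parse_page(html)


if __name__ == "__main__":
    sample = '<p>Hello</p><a href="https://example.com">link</a>'
    print(parse_page(sample))
```

Returning the links separately makes the next step easier for the model, since it can pick the URLs of the first three posts without digging through markup.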

Lazy agents

LLMs are lazy. When asked to tell me about the first three posts on Hacker News, my assistant would only grab the titles of the posts to understand what they are about, instead of visiting each post and reading it. I have to explicitly tell it to “Go to Hacker News, find the first 3 posts, visit each of the links, and tell me what each is about”. Admittedly, I have only tried this with Gemini 1.5 Flash and Pro but I suspect it’s the same for the other models, except for the new tier of reasoning models, such as OpenAI’s o1.

ChatGPT responds within seconds, so we can converse back and forth with it until we get what we want. I remember in the early days of Perplexity, it would clarify my request before providing the answer. For instance, if I ask it to plan a trip to Japan, it would ask me when I’m traveling and how many people are going before providing an answer. They have since removed this functionality probably because the results are produced in seconds and I can easily ask a follow-up question when I realize I missed a detail in my original question. If I see the response is about a summer vacation in Japan, I can enter “I’m going in December” and get a winter itinerary in no time.

But AI assistants (and even the new reasoning models such as o1) take a long time to return a result because they have to think and do several things to create the output. If the result is not what I want, I have to wait minutes or maybe hours to get another result. It’s better to share as much detail and context as possible upfront—perhaps a brief rather than a question—so that the assistant can get it right on the first attempt.

Computers don’t like computers

My Assistant Aura cannot scrape Reddit because Beautiful Soup can only scrape static HTML, not content that is loaded dynamically. I have another tool that uses Selenium, which can scrape dynamic content. But Reddit blocks programs from accessing its website without permission. Ecommerce sites like Amazon and social media platforms like Twitter also tend to block web scrapers for various reasons.

For agents to be widely used, they need to be able to access all websites (and log in on our behalf, which is a whole other problem for another article). Unlike my assistant that scrapes websites, Claude’s computer use actually visits a website on a virtual computer and browses, like a human does. This allows it to bypass the anti-scraping measures (but not necessarily terms of service and legal actions, which might need to be updated in a world of agents).

Debugging is a pain

While we might not want to overwhelm users with what the agents are doing behind the scenes, we as developers want to know exactly what the agents did, especially when things break. If this were a product, I should likely have a separate app where I have the logs. But since this is just an exploration, I added multiple print statements throughout my code.
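One step up from scattered print statements is to record every tool call in one place. The sketch below is only an illustration of that idea, not what my code does: `traced` and `TRACE` are hypothetical names, and the `visit_website` here is a placeholder standing in for a real tool.

```python
import functools
import logging
import time
from typing import Any, Callable, Dict, List

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("agent")

# In-memory record of every tool call, in the order the agent made them.
TRACE: List[Dict[str, Any]] = []


def traced(tool: Callable[..., Any]) -> Callable[..., Any]:
    """Wrap a tool so each call logs its arguments, outcome, and duration."""

    @functools.wraps(tool)
    def wrapper(*args: Any, **kwargs: Any) -> Any:
        started = time.perf_counter()
        entry: Dict[str, Any] = {"tool": tool.__name__, "args": args, "kwargs": kwargs}
        try:
            entry["result"] = tool(*args, **kwargs)
            return entry["result"]
        except Exception as error:
            entry["error"] = repr(error)
            raise
        finally:
            # Runs on success and on failure, so broken steps are recorded too.
            entry["seconds"] = time.perf_counter() - started
            TRACE.append(entry)
            logger.info("tool call: %s", entry)

    return wrapper


@traced
def visit_website(url: str) -> str:
    """Placeholder tool for the example."""
    return f"source of {url}"


if __name__ == "__main__":
    visit_website("https://news.ycombinator.com")
    print(len(TRACE), "call(s) recorded")
```

This does not explain why the model chose a step, but it does give an ordered record of what it did, which is the first thing I want when a run breaks.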

Debugging was challenging. My assistant creates its plan and makes adjustments when necessary. But it might not explain why it did this or that, even when I explicitly asked it to think out loud in the system prompt. This often led to bugs that were hard to reproduce.[2] This problem compounds because the assistant takes multiple steps to complete each task.

Here’s an example: When it couldn’t extract the URL of the featured article on Wikipedia using its own abilities as an LLM, it decided to use a complex regular expression, which also failed because it cannot run code on its own. When I restarted the program and tried again, it succeeded on its first try. Why did it choose the various approaches? How did it come up with the approaches? How can I steer it to more successful approaches without knowing what the user might ask for? I’m still figuring it out.

There might be an opportunity for a product or open-source project for observing AI agents, which are more complicated than “traditional” AI apps.

Chat or email or something else

Because I was focusing on learning about the technology behind AI agents, I used the Terminal as the interface. Honestly, I don’t expect many people to get excited about using the Terminal to interact with their AI assistant.

Chat is the most common interface for working with AI agents at the moment. But is that the best interface? I think chat is beneficial because it mimics the experience of working with our colleagues or executive assistants. We want to be able to message our AI assistant to delegate tasks and sometimes change our instructions. Chat also provides flexibility that a pre-built SaaS interface cannot offer.

But if our AI assistant takes minutes or even hours or days to complete the work, a chat interface might create the wrong expectations. We expect bots to reply immediately. In this sense, would an email interface be better? Also, recurring tasks, such as triaging my emails or curating news, don’t require us to keep chatting with our AI assistant. Would a dashboard interface be better? Or a chat interface that can surface different components based on the task?

I suspect the ideal will be a combination of different interfaces. But I’m still figuring it out. What do you think?

Jargon explained

Semaphore: This is not AI jargon but a coding term, which I just learned from SK. It is a mechanism that limits how many parts of a program can access the same thing at once, which helps prevent them from editing it at the same time (a problem known as a “race condition”). It is also a beautiful word; in French, a sémaphore is a signaling system used for communication.
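Here is a small sketch of a semaphore in Python, using the standard `threading.Semaphore`. The counter and the function names are made up for illustration. With a limit of 1, only one thread at a time can run the guarded line, so no increments get lost.

```python
import threading

counter = 0
guard = threading.Semaphore(1)  # at most one thread inside the guarded block


def add_many(times: int) -> None:
    """Increment the shared counter, one guarded step at a time."""
    global counter
    for _ in range(times):
        with guard:  # acquire on entry, release on exit
            counter += 1


def run(thread_count: int = 4, times: int = 10_000) -> int:
    """Start several threads that all edit the same counter."""
    global counter
    counter = 0
    threads = [
        threading.Thread(target=add_many, args=(times,))
        for _ in range(thread_count)
    ]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return counter


if __name__ == "__main__":
    print(run())
```

A higher limit, such as `threading.Semaphore(3)`, would let up to three threads in at once. That suits something like capping parallel web requests, but it no longer protects a shared counter.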

Inference-time scaling: Previously, improvements in AI models came from scaling the model size, data, and compute power during training. Then, model providers such as OpenAI realized they could improve the models further by giving them more compute when generating the answer (i.e. during inference). Essentially, the models spend more time (or more tokens) thinking about the problem before giving the answer, which produces better results. OpenAI’s o1 and upcoming o3, Google’s Gemini 2.0 Flash Thinking, and DeepSeek’s DeepSeek-R1 leverage this technique and are known as “reasoning models”.

Last week I explained chain-of-thought (CoT). Interestingly, this week I read OpenAI’s advice on prompting its o1 model: “Avoid chain-of-thought prompts: Since these models perform reasoning internally, prompting them to "think step by step" or "explain your reasoning" is unnecessary.” The guide even mentioned that CoT can sometimes hinder performance.

LMSYS Chatbot Arena: Massive Multitask Language Understanding (MMLU) is one of the most popular benchmarks for measuring LLMs, consisting of about 16,000 multiple-choice questions spanning 57 academic subjects. Arena, on the other hand, is a crowdsourced evaluation for LLMs that is as popular and often cited by top model providers. People chat with two anonymous LLMs and vote for the better answer, which creates a leaderboard. As explained on Latent Space, “Rather than trying to define intelligence upfront, Arena lets users interact naturally with models and collect comparative feedback. It's messy and subjective, but that's precisely the point - it captures the full spectrum of what people actually care about when using AI.”

Interesting links

I try to keep to three links each week but I came across many articles worth sharing this week.

Introducing ambient agents: The team at LangChain launched an AI email assistant and introduced the concept of ambient agents—agents who work simultaneously in the background and interact with users when necessary.

Language Model Sketchbook, or Why I Hate Chatbots: Maggie Appleton shared several interesting interface designs for using LLMs.

Multimodal Reasoning and its Applications to Computer Use and Robotics: I didn’t realize how similar computer use and robotics are until I watched this hour-long video.

Things we learned about LLMs in 2024: This recap by Simon Willison helped me catch up with the state and progress of LLMs in 2024.

Is AI progress slowing down?: “From the perspective of AI impacts, what matters far more than capability improvement at this point is actually building products that let people do useful things with existing capabilities… The technology behind the internet and the web mostly solidified in the mid-90s. But it took 1-2 more decades to realize the potential of web apps.”


[1] The term “agent” always sounds weird to me. Perhaps people got the idea from “travel agents” since AI programs always try to replace them. But “assistant” sounds much more friendly and less threatening than “agent”, like an “executive assistant”. I’d love to use “assistant” exclusively but it will be as futile as using another term to say “generative AI”. People are too used to it already. But I’d likely market such apps as “assistants” rather than “agents”. Let’s see.

[2] Though, part of the issue could be my coding experience!

You can scrape Reddit via API and the quota is actually quite generous. I'm currently scraping reddit a lot for my new app 16x Tracker https://tracker.16x.engineer/

Let me know if you want to chat more about it.