AI20: Image generation still feels like gambling

Some thoughts after generating about 1,000 images in the past few weeks

In the past few weeks, as I was designing a website, I generated about 1,000 images across Sora, ChatGPT, Visual Electric, and Google Gemini.

About a year ago, my cofounder SK and I tried building an AI-first Photoshop, Vispunk. Besides text-to-image generation, it has tools for selecting parts of an image for re-generation, drawing shapes and sketches, and generating images with images, to allow you to create the composition you want.

I’m surprised how little AI image generation has changed since then.

Sure, the image models have gotten better. They can generate more realistic faces, hands, and text but still distort eyes, fingers, and small letters regularly. But more importantly, even though they can generate nicer images, getting what you want is still hard.

Image generation is still like rolling the dice.

You keep trying until you get what you want.

I was reminded of this after burning through about 500 credits on Visual Electric within a day.

Text as the only input interface is terrible.

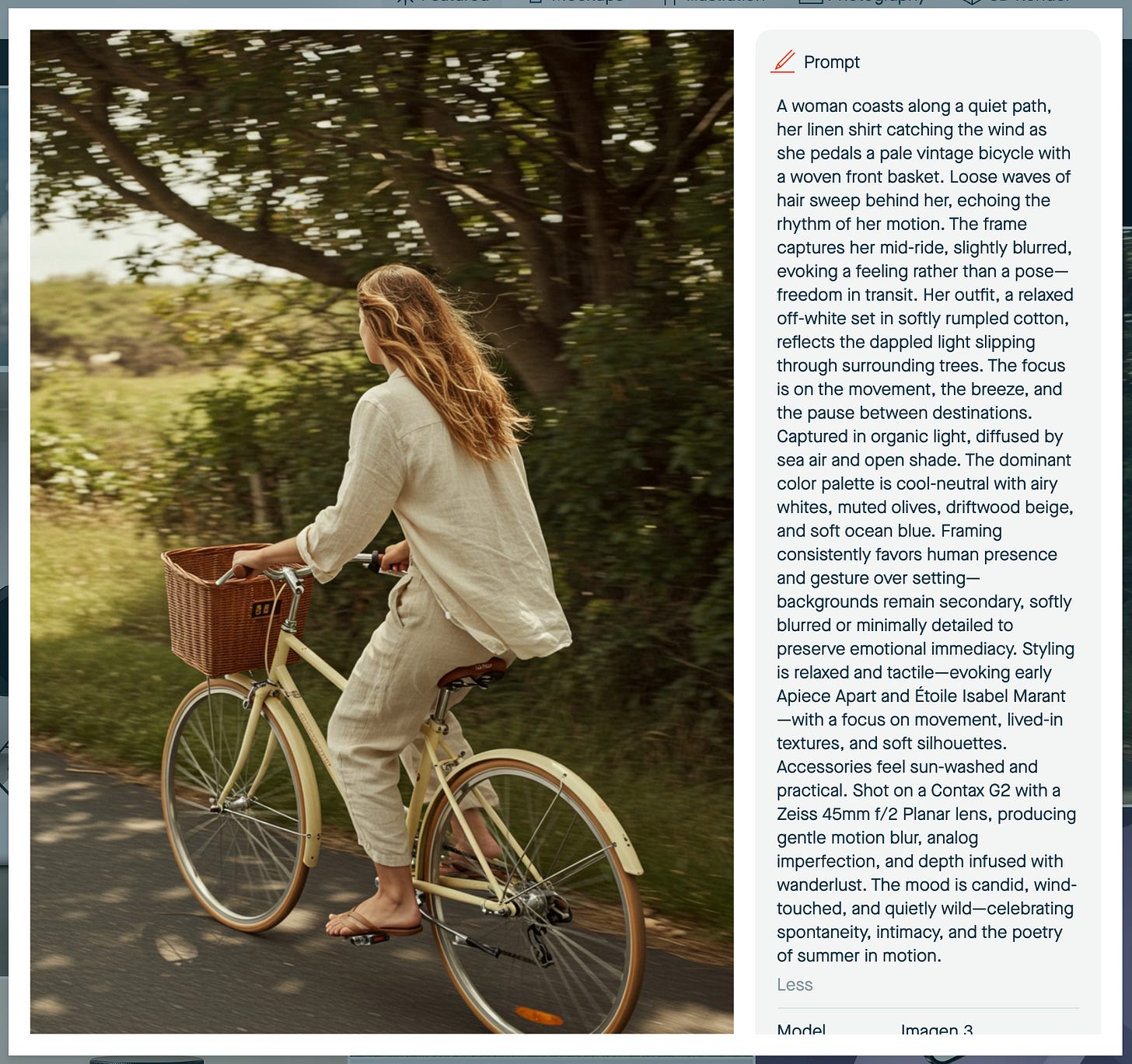

One, it’s hard to describe exactly the image we want. Often, we have to write a long paragraph to describe not just every object in the image but also the style, feeling, mood, and so on. Even then, we might not get exactly what we want. A term like “retro” can be interpreted in many different ways. Appending “Grainy analog, retro, 50mm” to the image prompt doesn’t mean the same “filter” for every image.

Two, if the generated image looks great except for one tiny part, it is impossible to pinpoint only that part and edit it with a text input. How do you describe a particular crayon in an image full of scattered crayons? What ends up happening is we would generate more images and pray one of them is better.

We built Vispunk because we thought (and still think) visual creation should be visual. Midjourney, which is notoriously known for having only a textbox in Discord, now has a visual editor.

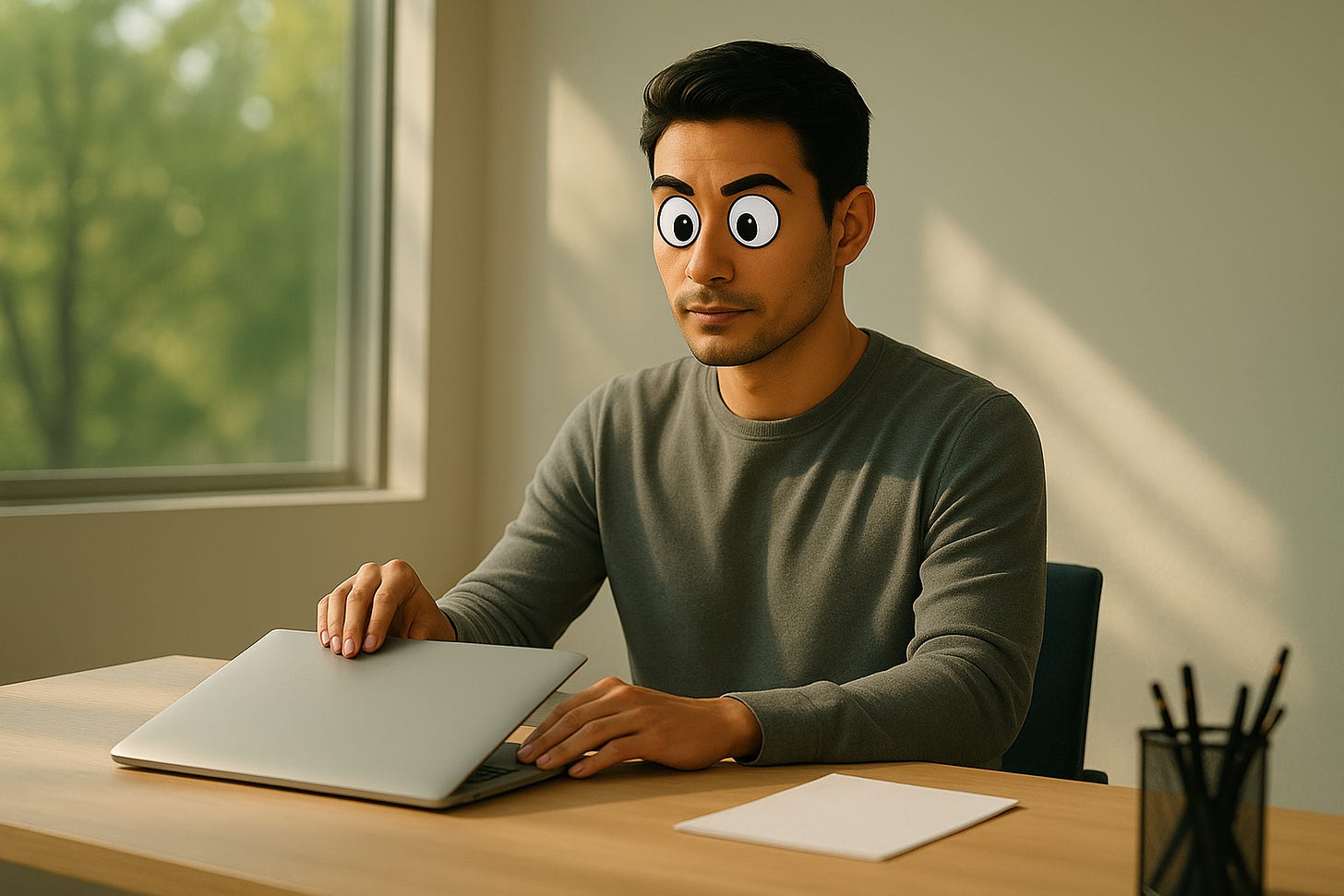

Sora allows me to select an area of a generated image and describe the changes I want. But it seems to change more than the selected area. Here’s a funny example where I selected the eyes and asked Sora to “fix the eyes”:

Given that I started this newsletter to learn more about AI, particularly AI agents, I wonder if an automatic multi-step workflow can make image generation easier.

Writing long, detailed prompts is challenging. I often look at images by other users in the galleries and edit the prompts to match what I want. An agent could chat with me back and forth to understand what I want and then create an image prompt. I guess ChatGPT does this.

For Pebblely Fashion, we added several post-processing steps to make the images, especially the faces, better, because we knew every image would have a face but it would almost always require upscaling to look more realistic. An agent could automatically pick up distorted faces or hands and fix them.

Generating one image in a preferred style is relatively easy but creating a set of images in the same style is not. Just slapping the same “filter” on different images doesn’t always give a consistent feel because the original image might already have different styles. An agent could take the original style into account and edit the images to look and feel the same aesthetically.

Whether an image is good or “correct” is subjective. Sometimes I might actually want distorted images. The recent models can probably assess images according to the image prompt like I would, identify areas for improvement, and inform me or make edits directly.

But unless the end result is exactly what I want, I would still prefer a visual way to pinpoint the parts of the image I’d like to edit.

Photoshop has many of the things I described above right. But it has such a steep learning curve. An innovative solution that I saw on X/Twitter1 is generative onboarding: You chat with an AI assistant to edit your image. As it makes the edits, the button for the respective feature is added to the user interface. The user interface won’t be overwhelming because it is empty at the start and becomes richer as you learn.

Playground had many of Vispunk’s features and more, such as creating with ControlNet. But I just noticed that they pivoted to a much, much simpler interface and experience. Perhaps they learned that the previous paradigm doesn’t work, though many of their customers seemed upset about the change.

Maybe we should bring back Vispunk someday. Let’s see.

Jargon explained

(Nothing for this week because I was mostly generating images and writing copy.)

Interesting links

Claude 4: Anthropic released Claude 4 Opus and Claude 4 Sonnet. I have yet to test them enough to have a comment but from my reading, I chanced upon Anthropic’s recommendations for managing memory, which is an important component for AI agents.

Working with LLMs: A Few Lessons: “Unlike with traditional software there is no way to get better at using AI than using AI.” and “Mostly, the way to make sure you have the skills and the people to jump into a longer-term project is to build many things. Repeatedly. Until those who would build it have enough muscle memory to be able to do more complicated projects.”

Nous Research: I love the style of the website: A simple blue and white theme, monospaced fonts, and AI images.

Recent issues

AI19: Using ChatGPT to produce creatives

I have spent the last two weeks designing landing pages for our upcoming product, an AI agent for office work.

AI18: BTS of designing websites for AI products

For the past week, I have been designing a website for our upcoming product.

AI17: Designing for humans vs AI agents

When I create an AI agent, I would usually print the LLM’s responses, tool calls, tool results, and other logs to the Terminal. This is so that I know what is happening while the agent is running.

I can’t find the tweet now, and neither could o3. I was disappointed!