Alfred Intelligence 6: AI agents should not show what they are doing

If we want AI agents to do tasks for people autonomously, we shouldn't design interfaces that make users want to sit there and watch.

If you are new here, welcome! Alfred Intelligence is where I share my personal notes as I learn to be comfortable working on AI. Check out the first issue of Alfred Intelligence to understand why I started it.

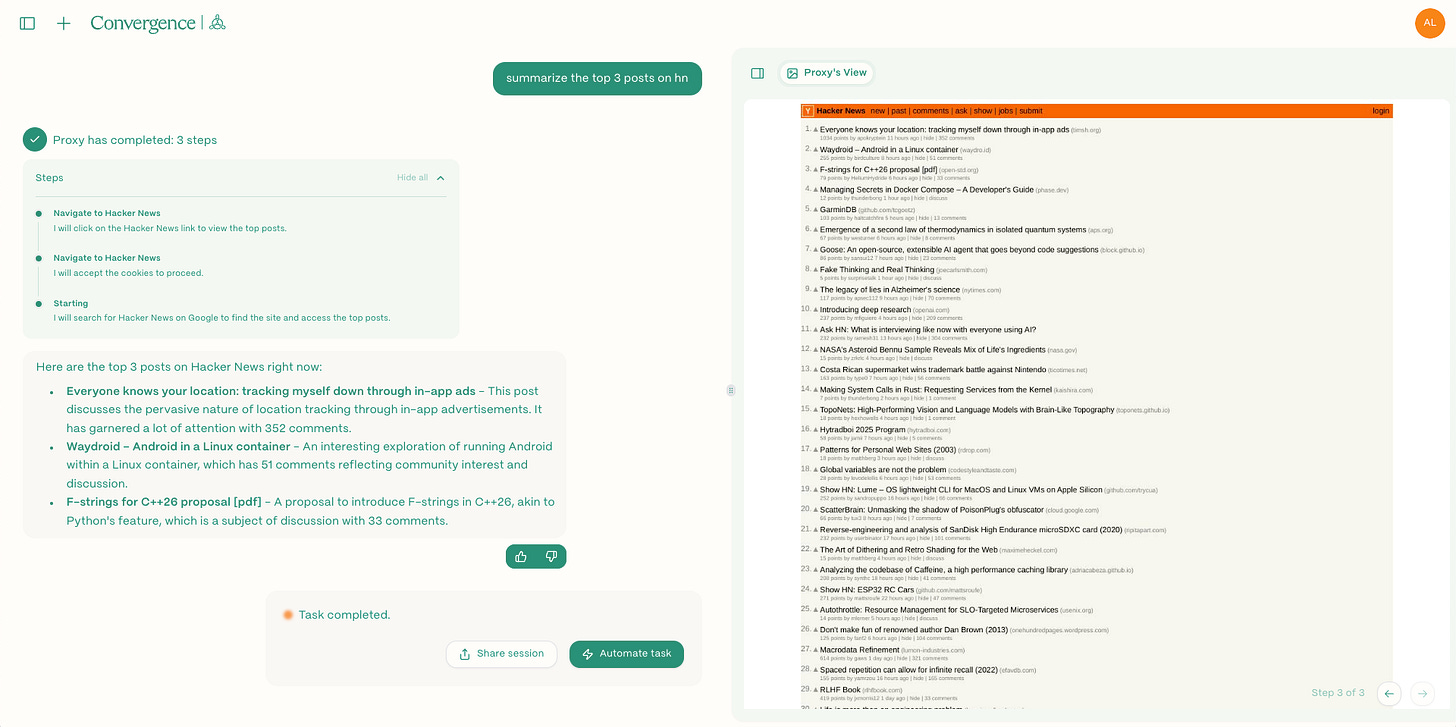

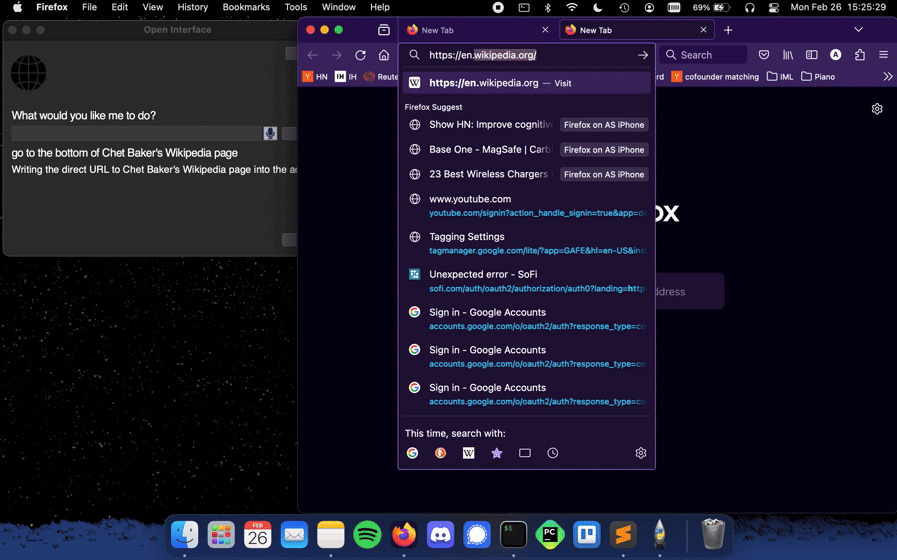

I recently looked at more than 10 general AI agent apps. Most of them, after receiving a task, run off to complete it, stream their thoughts and actions in the chat interface or Terminal, and show the AI agent’s actions on the computer or browser in a side panel.[1]

Loosely speaking, all of them show, by default and in real time, what they are thinking about and working on: using the browser, clicking a button, typing text.

But I argue they shouldn’t.

They should work in the background, out of sight, so that we are not tempted to monitor them. We should still be able to ask for updates whenever we want.

Most people would not want this, mostly because we do not trust the agent enough and are not comfortable with it working autonomously (yet). But insisting on watching the agent creates two problems:

What happens when the AI agent browses 10 websites simultaneously to find information quickly? 50 websites? 100 websites? We humans will not be able to keep up. Would it make sense to slow down the AI agent just so that we can supervise its actions?

More critically, if we cannot trust the AI agent without monitoring it constantly, what is the point of using an AI agent? You might argue this is required until the AI agent is good enough to complete tasks reliably without creating real-world problems. Sure, I agree with that. But why not use it for tasks that it can already complete (fairly) reliably and where it cannot create real-world problems? Despite all the fancy demos, I doubt people want to use AI agents to buy groceries or air tickets. But how about creating a personalized daily newspaper in the specific style you want? Or researching a topic and compiling the findings in Google Docs? Or triaging emails and crafting a draft response?

OpenAI’s deep research agent is a good example we can look to: It can browse multiple websites at the same time, much faster than us humans, and it focuses on creating reports, which doesn’t result in real-world problems even if the reports are incorrect.[2]

If AI agents shouldn’t show what they are doing, what should they even show? What would that look like? Most importantly, how would it feel? To answer these questions, I prototyped several experiences with my AI assistant. Even though most of them didn’t feel right, each helped me iterate towards a better experience.

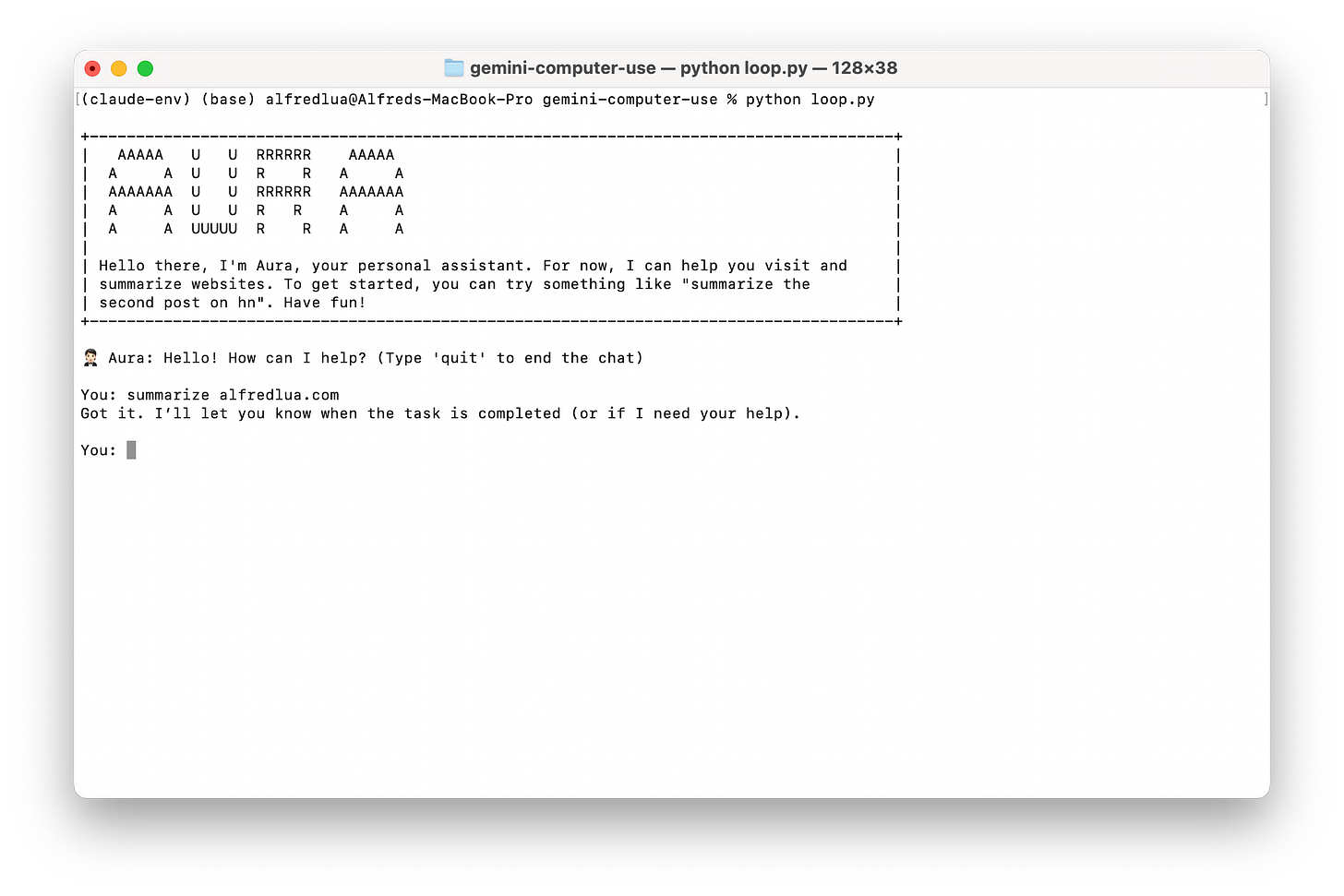

1. “I’ll let you know when I’m done” 🤔

Instead of updating the user after each step of the plan, I hid all the updates. The AI assistant will only acknowledge the task and respond with the answer when it’s done. This is similar to how we work with our colleagues.

But it felt awkward. Despite the heads-up, I felt uneasy seeing no visible progress. I know it is not my human colleague, and I expected more. Even non-AI apps will show a loading state. Is the AI assistant working? Does it know what to do? Is it stuck? How much longer will it take? Should I wait here or do my other work?

I suspect some of these questions would go away after a few attempts, once I have seen that the AI assistant is working despite the lack of visible progress, that it knew what to do for past tasks, and that it stops when it is stuck.

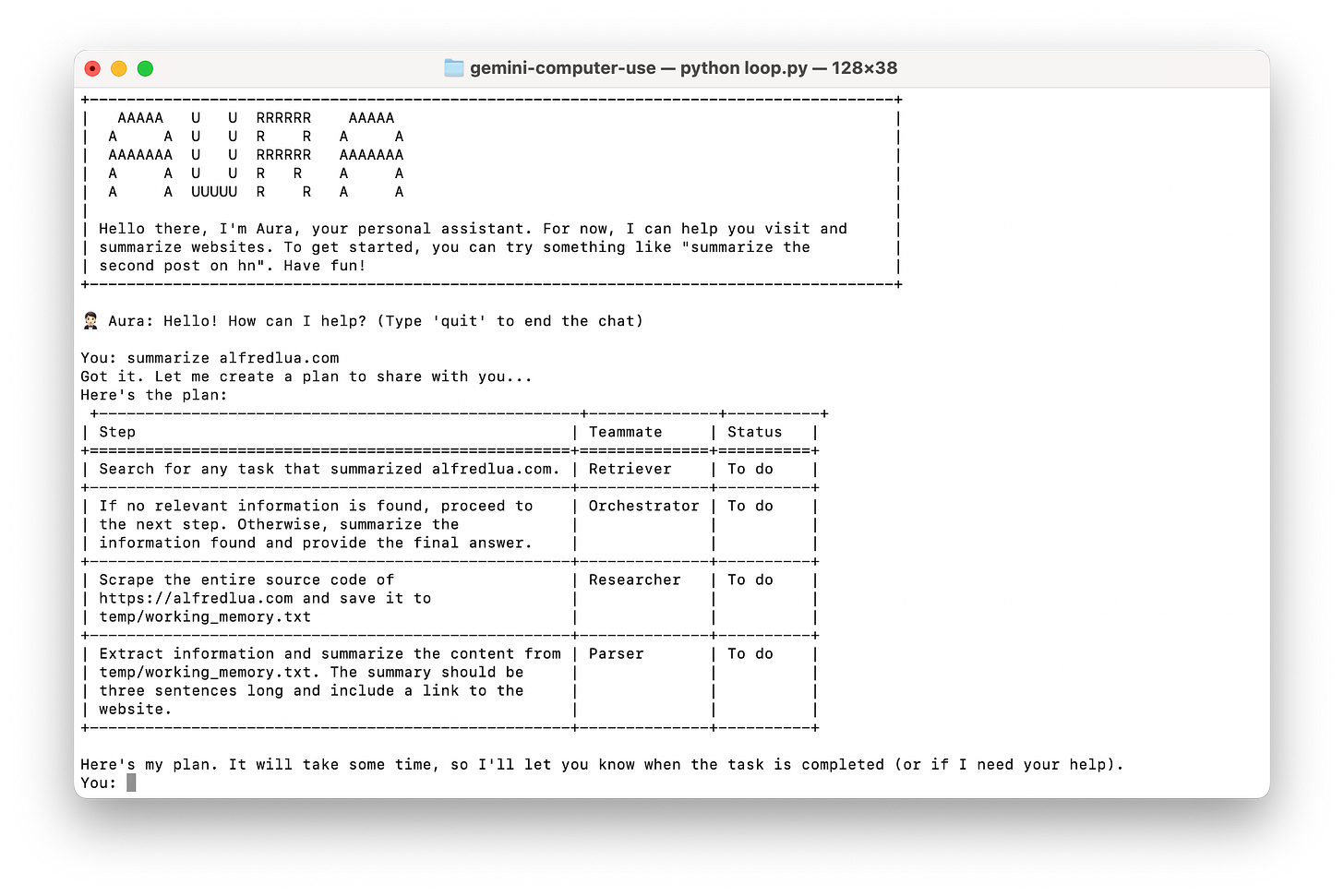

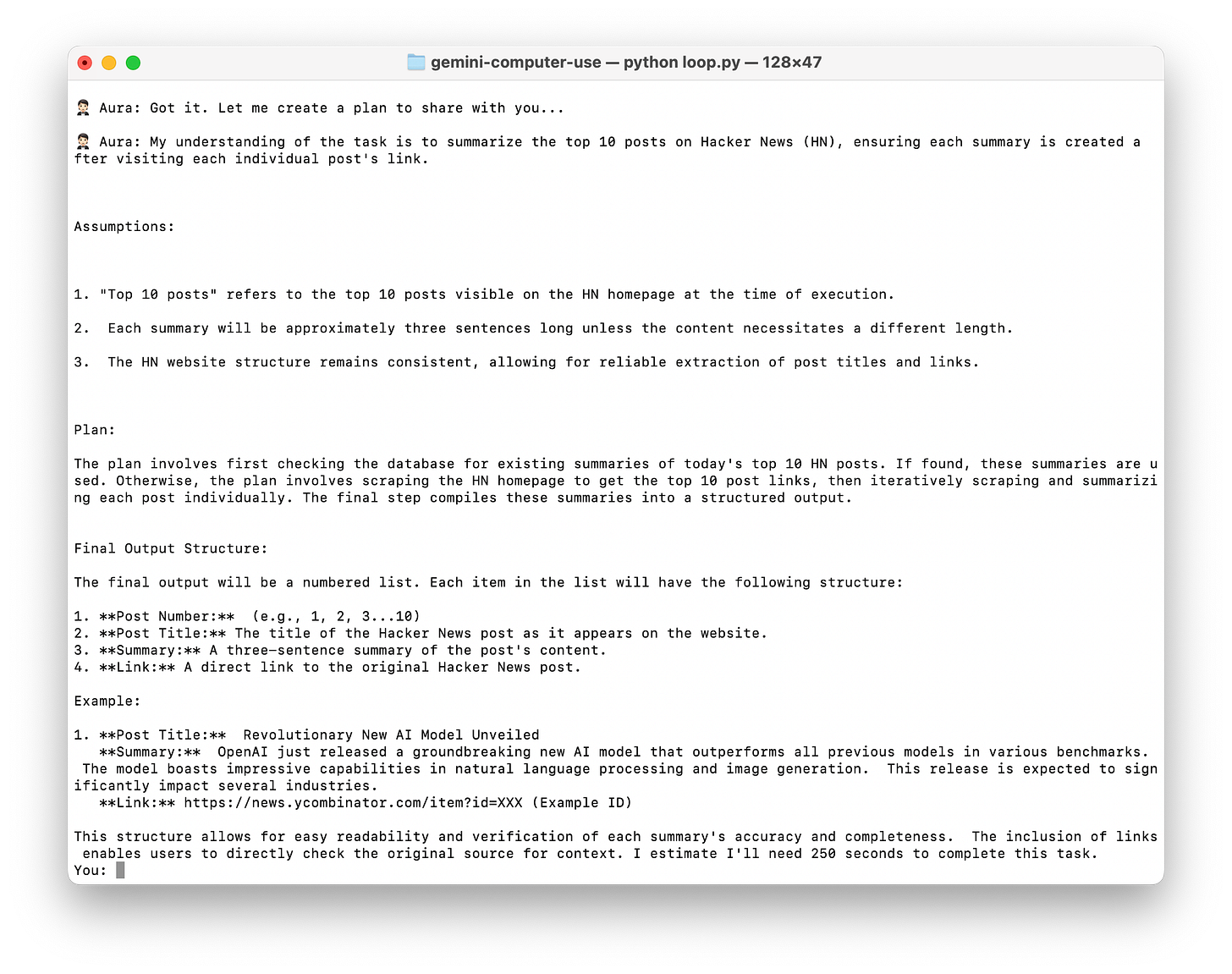

2. Show the plan ❌

To tackle the questions around whether the AI assistant knows what to do and how long it will take, I attempted to show the exact steps the AI assistant will take. I can quickly review the plan and guess the duration (3 steps vs 10 steps).

However, I realized I shouldn’t need to care about how the AI assistant will complete the task as long as it can produce the result I want. Just like showing what the AI assistant is doing in real-time, showing the plan encouraged me to supervise, which I don’t want. Also, what if there are 100 steps in the plan?

3. Show the plan + Stream one-liner updates ❌

To tackle the questions around whether the AI assistant is working or stuck, I experimented with streaming short updates.

While this kept me in the loop on the progress, it also encouraged me to monitor the updates. A workaround could be hiding these updates in a sidebar by default like OpenAI does for deep research.[3] But if there were hundreds of updates, they would be useless to me because I wouldn’t be able to follow along and I probably wouldn’t want to.

4. Update every X minutes ❌

I thought this could be an interesting way to keep me updated without overwhelming me. But it fell apart as soon as I thought it through, before I even implemented it. What if one task takes 30 seconds and another takes 60 minutes? How often should the updates be? Would it make sense to ping the user regularly? Being interrupted by a constant stream of FYI notifications while you are working doesn’t feel any better than watching the AI assistant work.

5. Pull, not push, updates 🤔

Flipping it around and letting me decide when I want updates felt better. When I was nervous about what the AI assistant was doing, I could ask for an update. When I was comfortable with it doing its thing, I could simply wait. Technically, I stored the updates internally and ran a second thread, separate from the execution thread, that I can ask for updates.[4]

But this is an invisible feature. I know about it because I built it. How do I ensure users know they can ask for updates? Perhaps I could try to educate users before they use the AI assistant. Perhaps it doesn’t matter: Users who don’t care about updates won’t bother; those who care would likely figure it out.
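To make the pull model concrete, here is a minimal sketch of one way it could work, not the actual code behind my AI assistant. It assumes the task runs in a background thread that quietly records its progress, while the main thread reads the latest update only when asked. The names `UpdateLog` and `run_task` are hypothetical, and the `time.sleep` call is a stand-in for real work.

```python
import threading
import time


class UpdateLog:
    """Thread-safe store for progress updates that nobody sees until they ask."""

    def __init__(self):
        self._lock = threading.Lock()
        self._updates = []

    def add(self, message):
        with self._lock:
            self._updates.append(message)

    def latest(self):
        with self._lock:
            return self._updates[-1] if self._updates else "No updates yet."


def run_task(steps, log):
    """Hypothetical execution thread: works silently and records each step."""
    for number, step in enumerate(steps, start=1):
        time.sleep(0.01)  # stand-in for the real work of this step
        log.add(f"Finished step {number} of {len(steps)}: {step}")


log = UpdateLog()
steps = ["search the web", "read sources", "write summary"]
worker = threading.Thread(target=run_task, args=(steps, log))
worker.start()

# The user pulls an update whenever they want; nothing is pushed to them.
print(log.latest())

worker.join()
print(log.latest())
```

The first `print` may show any step (or no update at all), depending on how far the worker has got. That is the point: the user sees progress only at the moment they ask.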

6. Show the plan summary, the expected output format, and the estimated time to completion 🤔

If I can be confident that the AI assistant knows what I want from the task, then I don’t have to care about how the AI assistant completes the task and don’t need regular updates. Getting onto the same page upfront feels crucial because the AI assistant could take minutes or hours to work on the task. I don’t want to wait 30 minutes only to get something I don’t want. I tried three things for this experiment:

My AI assistant gave a plan summary, not the detailed steps such as “click this button, type that”. This gave me enough context without overwhelming me. This is similar to Devin’s plan overview. While this information felt helpful, I’m not sure it’s necessary since I want to care only about the result, not the steps.

It described what the final output would look like. If it is not what I want, the idea is that I can ask my AI assistant to change the format. While it might work for simple text responses, it will not fit tasks that are done in other apps (e.g. tagging emails) or tasks where the format cannot be known until the research is done (e.g. writing an industry report).

It also estimated how long it would take to complete the task so that I could decide to wait there for a 30-second task or do something else for a 30-minute task. I arbitrarily multiplied the number of steps by 10 seconds, which is likely inaccurate but good enough to help me decide whether to sit here and wait or do something else.
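The estimate described above is simple arithmetic, sketched below. The 10 seconds per step is the arbitrary figure from my experiment. The function names and the cut-offs for "wait" versus "do something else" are assumptions added for illustration.

```python
SECONDS_PER_STEP = 10  # arbitrary figure from the experiment, not a measurement


def estimate_seconds(plan_steps):
    """Rough time estimate: number of plan steps times a fixed cost per step."""
    return len(plan_steps) * SECONDS_PER_STEP


def suggest_action(seconds):
    """Turn the estimate into a decision. The thresholds are assumptions."""
    if seconds < 60:
        return "wait here"
    if seconds < 3600:
        return "work on something else"
    return "come back much later"


plan = ["find sources", "read sources", "draft report"]
estimate = estimate_seconds(plan)
print(f"About {estimate} seconds: {suggest_action(estimate)}")
```

A three-step plan comes out at about 30 seconds, which falls in the "wait here" bucket. A 180-step plan would come out at 30 minutes and land in "work on something else".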

7. Choose your output format 🤔

Pulling the alignment thread a little more, what if I can specify my desired output format or file type via a drop-down menu or my prompt?

A short text response

A Notion document

A Google Sheet

An interactive site

Match the app interface (for tasks done on other apps)

No preference

A drop-down explicitly informs users this is possible. But coming up with a list of options upfront feels limiting if the AI assistant is meant to do a wide range of tasks. Even if I could come up with a comprehensive list, it would have hundreds of options, which feels like a terrible experience.

An alternative is to let the AI assistant infer the desired output format from the task. If the task will be done in another app, say Gmail, the expected output format could be a description of the outcome rather than a specific format:

Categories: A list of the categories I would use to triage emails (e.g., "Important", "Urgent", "To Respond To", "To Read Later", "Junk").

Criteria: For each category, a clear explanation of the criteria used to assign emails to that category.

If the task is to create a spreadsheet, the expected output format could be the column headers and an explanation of each header. If the task is to write a research report on an industry, where even the outline cannot be known before the research, the AI assistant could ask whether the user has an outline or sections in mind or is happy for the AI assistant to decide. This again shows the importance of getting alignment before the AI assistant executes its plan.

However, an issue SK and I ran into when letting the AI assistant determine the output format is inconsistency: the AI assistant uses different formats for the exact same task prompt. We could get the AI assistant to check with the user before each task, but the user shouldn’t have to repeat this alignment step for every task. Naively, we could store the user’s preference with something like OpenAI’s custom instructions or let users rate responses and use the highly rated responses to inform future tasks. We have yet to test these.

Potential key user interactions

After my experiments, these are key interactions I’d consider in an AI agent app:

Onboarding: The problem with a textbox, especially an empty one, is that users won’t know what they can do. The onboarding should start with the marketing materials, such as social media posts and the website, which should give some task examples suited to the AI agent. Within the app, I feel there should be some assistance when the user is typing, similar to Google’s autocomplete, to ensure users provide enough details in their instructions. But users should not feel restricted because they might use the app in ways I didn’t think about.

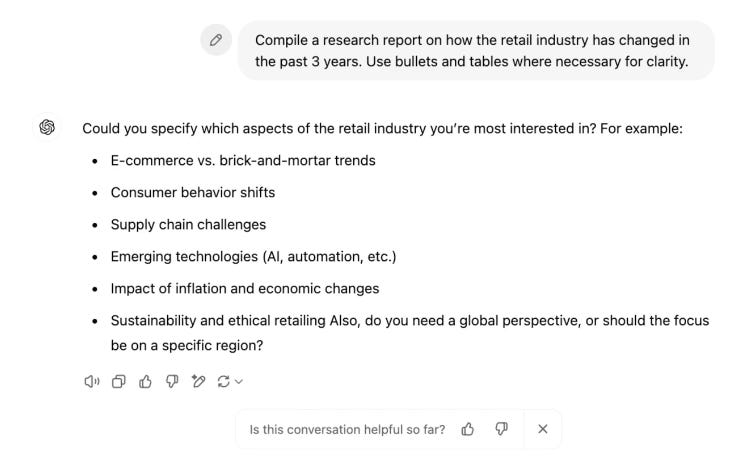

An expectation for the output: The user should at least get a sense of what the final result will be and be able to ask for adjustments if it doesn’t match their desired outcome. I played with Gemini 2.0 Flash Thinking, and it is possible to have it ask clarifying questions as OpenAI’s deep research agent does. While this initial phase of alignment feels critical, it also shouldn’t feel burdensome, especially if people will be using this app multiple times a day. The AI agent should learn the user’s preferences after several tasks, though this seems to be a tough challenge to crack.

A time estimation: This does not need to be accurate to within seconds but should allow users to decide whether they should wait for the task to be done (seconds), go work on another task (minutes), or go for lunch (hours). This should be possible since the AI agent has to formulate its plan before executing it, and the estimate could be updated if there are changes to the plan. An alternative, which might be OpenAI’s approach with deep research, is to set the expectation that every task will take 5 to 30 minutes, so you should always do something else and check back later.

On-demand updates: Instead of pushing all updates to users, users should be able to fetch updates only when they want to. The updates should feel like human messages rather than computer-generated logs, like Devin’s. OpenAI has this interesting prompt in its system prompt for deep research:

Personality: v2 Over the course of the conversation, you adapt to the user’s tone and preference. You want the conversation to feel natural. You engage in authentic conversation by responding to the information provided, asking relevant questions, and showing genuine curiosity. If natural, continue the conversation with casual conversation.

But…

Reliability is still key. Throughout all my tests, the main issue was when my AI assistant couldn’t complete the task. All these interaction designs are moot if the AI assistant is not reliable enough. I suspect this is why most companies are prioritizing reliability over interface and experience design.

But given how quickly the models are improving and how promising OpenAI’s o3 in deep research is, I believe most reliability issues will be resolved sooner rather than later. At that point, those who have thought about the subsequent steps—the interface and experience design—will have an advantage.

Jargon explained

Prompted baseline: I came across this in the ideal product process from OpenAI’s Karina. It means testing a model with a basic prompt to see how well it performs before trying to improve the results so that you know whether changes make results better or worse.

sys.stdout.write: I learned this function when I was trying to print messages more nicely in Terminal. Unlike print, it does not add a newline, so I can write control characters such as a carriage return or ANSI escape codes to move the cursor to the beginning of a line or up to the previous line and overwrite the existing text.
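Here is a small sketch of that trick, not my assistant’s actual code. It assumes a terminal that understands the standard ANSI escape sequences, and the helper names are hypothetical. The carriage return (`\r`) moves the cursor to the start of the line, `\x1b[2K` erases the line, and `\x1b[1A` moves the cursor up one line.

```python
import sys
import time

CURSOR_UP = "\x1b[1A"   # ANSI escape: move the cursor up one line
ERASE_LINE = "\x1b[2K"  # ANSI escape: erase the whole current line


def overwrite_line(text):
    """Return the characters that redraw the current line with `text`."""
    return "\r" + ERASE_LINE + text


def overwrite_previous_line(text):
    """Return the characters that redraw the line above, then move back down."""
    return CURSOR_UP + "\r" + ERASE_LINE + text + "\n"


def show_progress(total_steps, out=sys.stdout):
    """Keep rewriting one status line instead of printing a new line per step."""
    for step in range(1, total_steps + 1):
        out.write(overwrite_line(f"Step {step} of {total_steps}"))
        out.flush()  # write() does not flush, so push the text out now
        time.sleep(0.01)
    out.write("\n")


show_progress(3)
```

In a terminal, this leaves a single "Step 3 of 3" line rather than three separate lines.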

Double curly braces: My AI assistant app broke when I was trying to include a JSON example in my system prompt. I learned that if I want literal curly braces in a Python f-string, I need to use double curly braces ({{ and }}) instead of single curly braces ({ and }), because single braces mark a replacement field.

    # Doubled braces ({{ and }}) come out as literal { and } in the f-string.
    system_prompt = f"""
    Always output a plan in the following format:
    <plan>
    {{
      "plan": [
        {{
          "step": "Instruction for the first step",
          "teammate": "Orchestrator, Retriever, Researcher, or Parser",
          "status": "To do, Completed, or Failed"
        }},
        {{
          "step": "Instruction for the second step",
          "teammate": "Orchestrator, Retriever, Researcher, or Parser",
          "status": "To do, Completed, or Failed"
        }},
        {{
          "step": "Instruction for the third step",
          "teammate": "Orchestrator, Retriever, Researcher, or Parser",
          "status": "To do, Completed, or Failed"
        }}
      ]
    }}
    </plan>
    """

Interesting links

Multiple agents vs hallucination: “Using 3 agents with a structured review process reduced hallucination scores by [96%] across 310 test cases.” From my experiments, I also got better plans when a Plan Critic agent checks on the work of an Orchestrator agent.

A Visual Guide to Reasoning LLMs: If you are a visual learner like me, you will find this useful.

Say What You Mean: A Response to 'Let Me Speak Freely': The team at .txt showed that structured generation outperforms unstructured generation.

Doomed agent use cases: Arvind Narayanan argues that developers are focusing on the wrong tasks with their agents.

Are better models better? Benedict Evans wrote that better models that give better answers are not good enough for questions that need right answers. Even almost right is not good enough. If they can never generate the right answers, can we find use cases where “the error rate becomes a feature, not a bug”?


If you notice I misunderstood something, please let me know (politely). And feel free to share interesting articles and videos with me. It’ll be great to learn together!

[1] There are some exceptions:

Instead of immediately executing the task, Devin shows a plan overview and asks for confirmation or adjustments before executing the plan. OpenAI’s deep research agent asks clarifying questions before spending five to 30 minutes researching and synthesizing information into a long-form report.

Instead of streaming all the agent’s thoughts and actions, OpenAI’s Operator hides the step-by-step updates behind a toggle, which keeps the chat history usable (unlike Claude computer use).

Instead of showing what the agent is doing on the computer or browser, OpenAI’s deep research agent shows only text updates in a sidebar (likely because it is not doing anything visual).

[2] Perhaps we might not consider OpenAI’s deep research agent a general AI agent because it focuses on research. But research is a broad enough skill set that it feels general enough to me.

[3] I think it’s smart that OpenAI chose to put deep research’s updates in a sidebar, instead of showing them by default. Once people are comfortable with deep research’s output (or are bored of the updates), I believe they won’t check the updates anymore. We can think of this as OpenAI helping to educate the market and get people comfortable with agents working for them on long, complex tasks. The next wave of AI agents will benefit from this and can afford to show less information to their users.