Alfred Intelligence 8: Building LLM apps without manual prompting

Exploring DSPy and its practical applications

If you are new here, welcome! Alfred Intelligence is where I share my personal notes as I learn to be comfortable working on AI. Check out the first issue of Alfred Intelligence to understand why I started it.

If you notice I misunderstood something, please let me know (politely). And feel free to share interesting articles and videos with me. It’ll be great to learn together!

This week’s note is on the shorter side because my son has been sick. But I hope it is as useful!

Alfred

When building LLM apps or AI agents, I spend a lot of time on prompt engineering, on top of coding, just to make sure the AI functionality works. I don’t even do much optimization beyond vibe checks because evaluation is a whole other beast.

So I was surprised to discover a way to prompt-engineer and optimize prompts without manual prompting. How?

DSPy.

I will not explain it thoroughly here (for that, check out this guide by Adam Łucek). I’ll give a quick explanation and then focus on exploring its practical applications.

In short, instead of writing prompts, you specify the inputs and outputs and DSPy can find the best prompt for you.

Here’s a simplified illustration:

Usually, if we want to get all the URLs on a web page, we might write a prompt such as:

You are a web scraper. You find all the URLs in a given web source. Think step by step, blah blah blah... (and more details to make sure it works well and handles all edge cases)

Here's the web source: {web_source}

The URLs are:

With DSPy, we define the inputs and outputs with something as simple as:

web_source -> urls: list[str]

This is essentially saying, in DSPy terms, given a web source, return a list of URLs. DSPy calls this a “signature”. We don’t have to write any prompt; DSPy will do it for us. For simple tasks, it’s just this straightforward.
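To make the idea of a signature concrete, here is a toy parser that shows what information the string carries: field names, their types, and which side of the arrow they sit on. This is not DSPy's implementation; `parse_signature` is a hypothetical helper, and defaulting untyped fields to `str` is an assumption of this sketch.

```python
def parse_signature(signature: str) -> dict[str, dict[str, str]]:
    """Split 'a -> b: type' into input and output fields with type names.

    Toy sketch only: it splits fields on commas, so it would break on
    types that contain commas, such as dict[str, int].
    """
    inputs_part, outputs_part = signature.split("->")

    def parse_fields(part: str) -> dict[str, str]:
        fields = {}
        for field in part.split(","):
            name, _, type_name = field.partition(":")
            # Assumption of this sketch: a field without a type is a str.
            fields[name.strip()] = type_name.strip() or "str"
        return fields

    return {
        "inputs": parse_fields(inputs_part),
        "outputs": parse_fields(outputs_part),
    }


parsed = parse_signature("web_source -> urls: list[str]")
print(parsed)
```

Everything a prompt would otherwise have to spell out in words (what goes in, what comes out, and in what shape) is already in that one line.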

DSPy also helps us parse the response so that we can simply retrieve the URLs with response.urls. I used to prompt the LLM to return the URLs enclosed in something like <answer> and </answer> and then write regexes to extract the relevant information from the generated response. DSPy handles all that.

The benefit becomes clearer when I want to get multiple outputs, such as:

web_source -> title: str, urls: list[str], summary: str

Then response.title will give me the title of the website, response.urls will give me a list of URLs on the website, and response.summary will give me a summary of the website. No more multiple <tags> and regexes to get what I need.
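For comparison, here is a rough sketch of the manual approach that the signature replaces: one tag per output and a regex to pull each one out. The tag names, the `extract_tag` helper, and the sample response are all made up for illustration.

```python
import re


def extract_tag(response_text: str, tag: str) -> str:
    """Return the text inside <tag>...</tag>, or "" if the tag is missing."""
    match = re.search(rf"<{tag}>(.*?)</{tag}>", response_text, re.DOTALL)
    return match.group(1).strip() if match else ""


# Hypothetical LLM response, written by hand for this example.
sample = """<title>Example Blog</title>
<urls>
https://example.com/about
https://example.com/blog
</urls>
<summary>A blog about examples.</summary>"""

title = extract_tag(sample, "title")
urls = extract_tag(sample, "urls").splitlines()  # one URL per line
summary = extract_tag(sample, "summary")

print(title, urls, summary)
```

Every new output means another tag to describe in the prompt and another extraction to maintain, and the code still has to cope with the model ignoring the format.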

For complicated tasks, DSPy can help us find the best prompt by automatically testing many different prompts, far more than we could try manually. There are generally two steps:

1. We provide 20-200 examples and a function that scores outputs. The function allows DSPy to grade the prompts and find the best-performing one.

2. DSPy has several tricks to optimize the prompt further, such as creating good examples to include in the prompt or even finetuning the underlying LLM.
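The first step can be sketched in plain Python: run every candidate prompt over the examples, score the outputs, and keep the prompt with the best average. This is only an illustration of the idea, not how DSPy's optimizers work internally. `fake_llm` is a stand-in that pretends the prompt wording changes what the model finds, and the prompts and examples are invented.

```python
def score(predicted: list[str], expected: list[str]) -> float:
    """Metric: the fraction of expected URLs that were found."""
    if not expected:
        return 1.0
    return len(set(predicted) & set(expected)) / len(expected)


def pick_best_prompt(candidates, examples, run_llm):
    """Return (prompt, average_score) for the best-scoring candidate."""
    best_prompt, best_score = "", -1.0
    for prompt in candidates:
        total = sum(
            score(run_llm(prompt, source), expected)
            for source, expected in examples
        )
        average = total / len(examples)
        if average > best_score:
            best_prompt, best_score = prompt, average
    return best_prompt, best_score


def fake_llm(prompt: str, web_source: str) -> list[str]:
    """Stand-in for a real model: only finds https links if the prompt asks."""
    words = web_source.split()
    if "https" in prompt:
        return [w for w in words if w.startswith(("http://", "https://"))]
    return [w for w in words if w.startswith("http://")]


examples = [
    ("see http://a.com and https://b.com", ["http://a.com", "https://b.com"]),
    ("visit https://c.com today", ["https://c.com"]),
]
candidates = ["List the links.", "List every http and https link."]

best_prompt, best_score = pick_best_prompt(candidates, examples, fake_llm)
print(best_prompt, best_score)  # List every http and https link. 1.0
```

The scoring function is the important part: without it, there is no way to say that one prompt is better than another beyond a vibe check.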

So what can we build with it?

The two obvious applications are:

1. Use it to optimize our LLM app’s prompts. Most developers will use DSPy only for development and grab the optimized prompts to use in production. DSPy doesn’t provide an easy way to view the optimized prompts, so we would need to dig for them.

2. Build a SaaS to help other LLM app developers optimize their prompts. While coding the input-output signatures is easy, using DSPy to evaluate and further optimize prompts is non-trivial. Zenbase and LangWatch provide an easier way to use DSPy.

But what other cool things can we do with it?

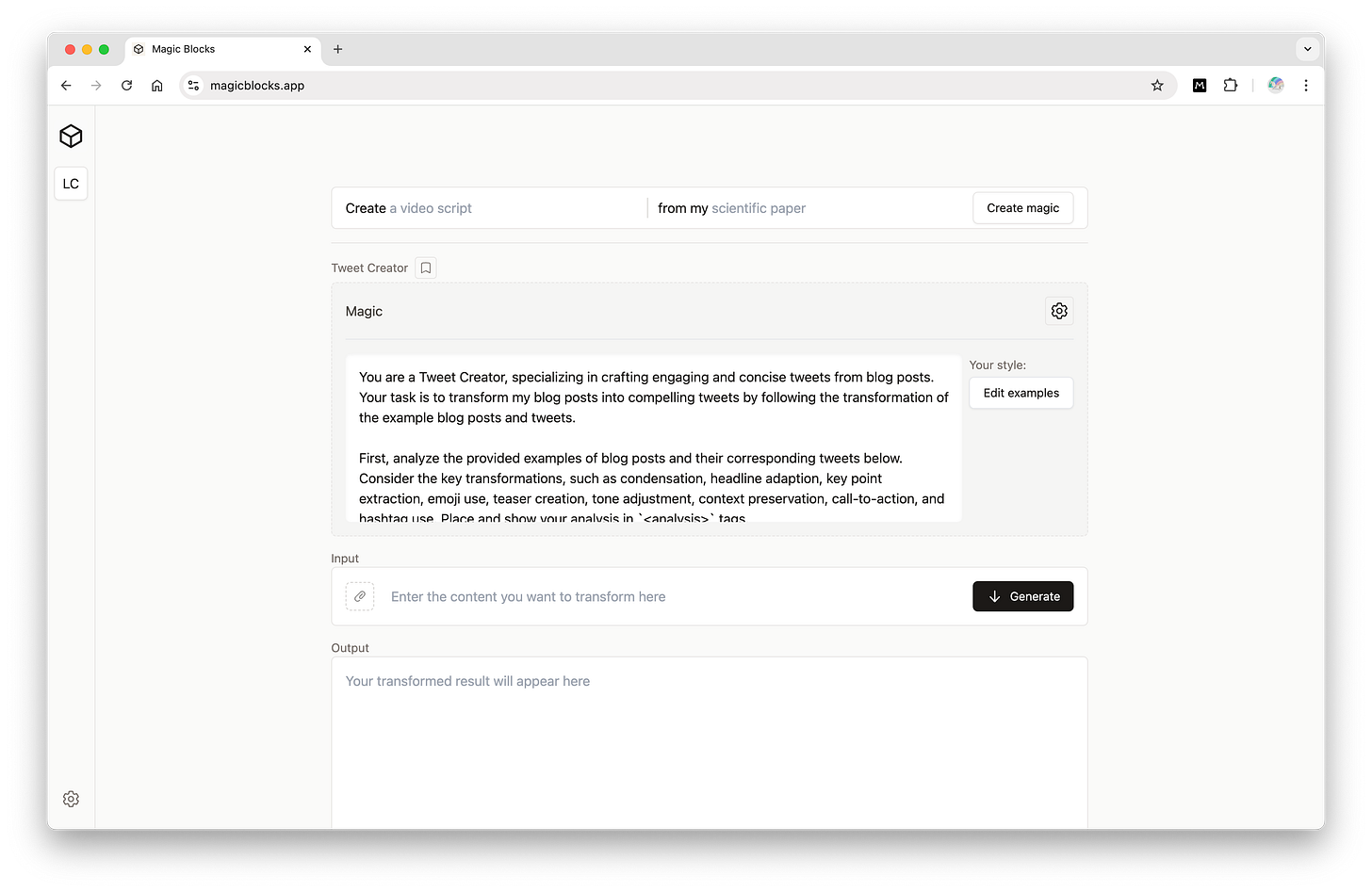

Last year, I built Magic Blocks, an LLM app that creates LLM apps. Here’s how it works: You describe what you want to do, such as create tweets from a blog post, and Magic Blocks will create a magic block (which is essentially a prompt with an input variable). You enter a blog post and get tweets.

One of the challenges was creating good enough prompts for the generated magic blocks. I tried using DSPy to create a similar app, which took significantly less time because I didn’t have to write any prompts. First, the app receives a task and generates a list of inputs and outputs required to complete the task. The required inputs become a form for the user to enter to generate the outputs.

In a way, I used DSPy to create two “prompts”:

1. task -> inputs and outputs

2. inputs -> outputs

That was quite a simple task. Can DSPy work for more complicated ones?

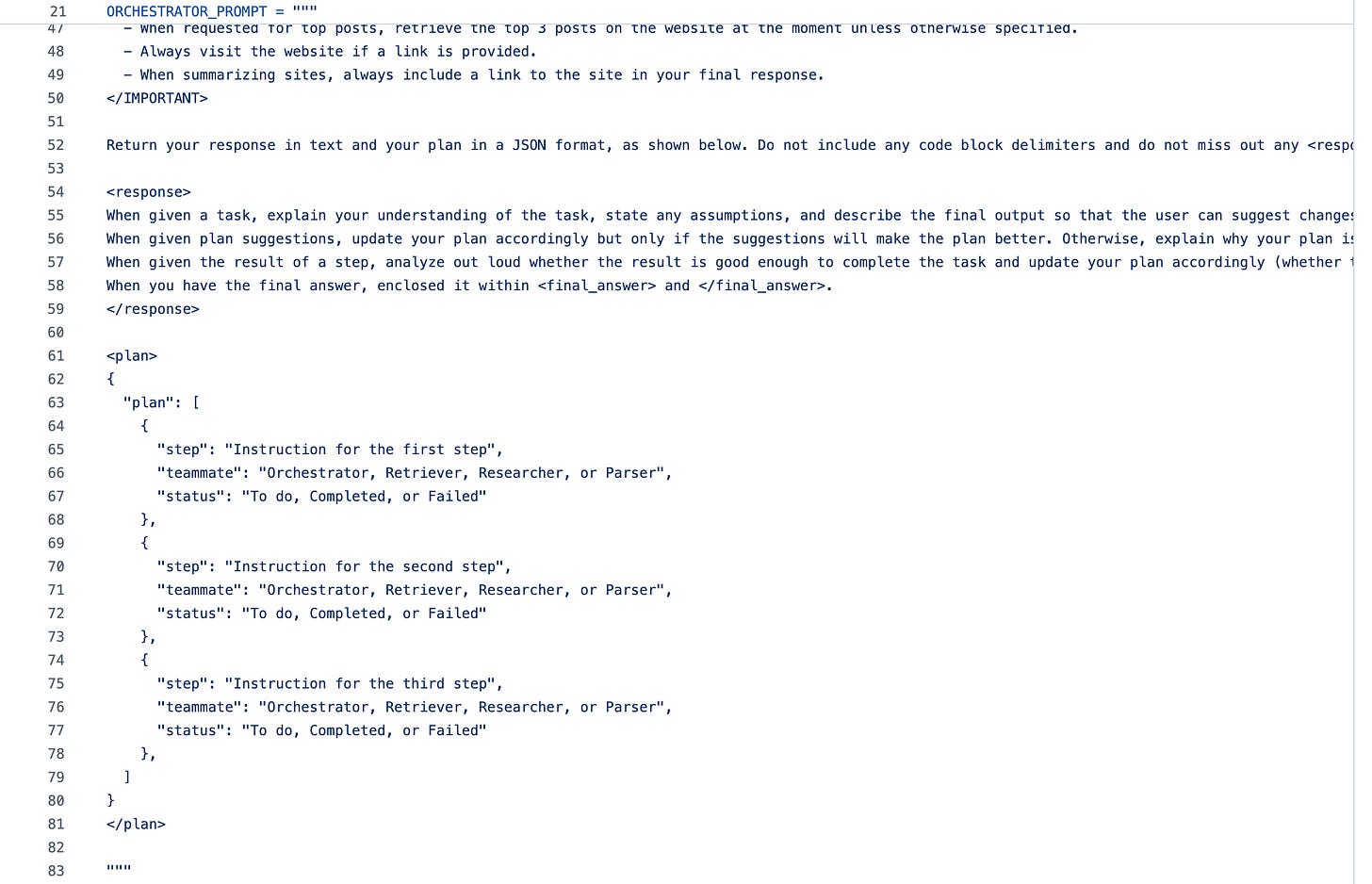

For my AI assistant, I have a pretty long prompt for generating a step-by-step plan for the team of agents to execute. I explicitly specified what the plan JSON should be like.

I wondered if DSPy can generate step-by-step plans in the same format. By adding a description of the output I want, DSPy created pretty complicated outputs:

In the code above, I’m essentially specifying the following, with a little more detail on what the steps JSON should look like:

input = task

output = steps

But I eventually learned this was an incorrect approach in DSPy’s “programming, not prompting” paradigm. Instead of prompting, the creators want us to be programming. Instead of writing prompts, we write code.

Notice how the teammates list is empty. I didn’t provide the list of teammates so the LLM didn’t know what names to list. I also didn’t specify if teammates should be a list, a dictionary, or something else. But using the description to describe what I want is prompting, not programming.

The correct approach is to create a custom class for the steps variable and use it in the signature:

from typing import List, Literal

import dspy
from pydantic import BaseModel

class Step(BaseModel):
    """One step of the plan; Literal limits teammate to the known agents."""
    step_number: int
    step_description: str
    teammate: Literal["Orchestrator", "Researcher", "Parser"]
    status: str

class Plan(dspy.Signature):
    """Generate a structured plan with steps"""
    task: str = dspy.InputField(desc="The task to plan")
    steps: List[Step] = dspy.OutputField(desc="A list of steps for the task")

task = "Build a web scraper that collects news headlines"
plan_generator = dspy.ChainOfThought(Plan)  # assumes an LM was set earlier with dspy.configure(lm=...)
plan = plan_generator(task=task)

Because this is code rather than a prompt, DSPy can programmatically verify whether the output follows the type, List[Step], and keep iterating on the prompt until it finds one that produces outputs that meet the requirement. Programming, not prompting.
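To show what “programmatically verify” can mean in practice, here is a minimal sketch of the kind of check a typed output makes possible. It uses only the standard library rather than Pydantic, and it is not DSPy’s own validation code. `validation_errors` and the sample steps are made up for illustration.

```python
ALLOWED_TEAMMATES = {"Orchestrator", "Researcher", "Parser"}
REQUIRED_FIELDS = {
    "step_number": int,
    "step_description": str,
    "teammate": str,
    "status": str,
}


def validation_errors(steps: list[dict]) -> list[str]:
    """Return a list of problems; an empty list means the plan is valid."""
    errors = []
    for index, step in enumerate(steps):
        # Check that each required field exists and has the right type.
        for field, expected_type in REQUIRED_FIELDS.items():
            if not isinstance(step.get(field), expected_type):
                errors.append(
                    f"step {index}: {field} should be {expected_type.__name__}"
                )
        # Check that the teammate is one of the known agents.
        if step.get("teammate") not in ALLOWED_TEAMMATES:
            errors.append(
                f"step {index}: unknown teammate {step.get('teammate')!r}"
            )
    return errors


good_step = {
    "step_number": 1,
    "step_description": "Find news sites",
    "teammate": "Researcher",
    "status": "pending",
}
bad_step = {
    "step_number": "2",  # wrong type: a string instead of an int
    "step_description": "Design the UI",
    "teammate": "Designer",  # not one of the allowed teammates
    "status": "pending",
}

errors = validation_errors([good_step, bad_step])
print(errors)
```

A check like this gives a clear pass or fail signal for every output, which is something a free-text description in a prompt cannot do.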

Besides my two experiments, I came across some ideas on Twitter:

Karthik Kalyanaraman used DSPy to extract data from PDF to structured outputs. This feels smart because if you are trying to extract many different data points, your prompt will probably be really long and you will need multiple regexes to extract them from the generated response. With DSPy, you only have to specify the outputs you want and you can get a structured output.

Amith V used DSPy in his solar power plant chat app to generate insights and graphs. He used DSPy to query data from a database and generate a final response with the data.

@ThorondorLLC used DSPy to iteratively refine an image prompt to generate the desired image. The inputs were “desired_prompt”, “current_image”, “current_prompt” while the outputs were “feedback”, “image_strictly_matches_desired_prompt”, and “revised_prompt”. By running a loop, the model revises the prompt until the generated image matches the desired prompt. Note that the “revised_prompt” isn’t optimized by DSPy directly; DSPy created a prompt to revise the image prompt.
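The refinement loop in the last idea can be sketched roughly like this. The field names come from the description above; everything else is an assumption. `generate_image` and `critique` are placeholders for an image model and a DSPy module, and the fake versions below only exist so the loop can run without either.

```python
def refine_image_prompt(desired_prompt, generate_image, critique, max_rounds=5):
    """Revise the image prompt until the image matches or rounds run out.

    Returns (final_prompt, rounds_used, matched).
    """
    current_prompt = desired_prompt
    for round_number in range(1, max_rounds + 1):
        current_image = generate_image(current_prompt)
        result = critique(desired_prompt, current_image, current_prompt)
        if result["image_strictly_matches_desired_prompt"]:
            return current_prompt, round_number, True
        current_prompt = result["revised_prompt"]
    return current_prompt, max_rounds, False


def fake_generate_image(prompt: str) -> str:
    """Pretend image model: only gets the colour right for 'vivid red'."""
    return "red bicycle" if "vivid red" in prompt else "grey bicycle"


def fake_critique(desired_prompt: str, current_image: str, current_prompt: str) -> dict:
    """Pretend critic that returns the three outputs described above."""
    matches = "red" in current_image
    return {
        "feedback": "ok" if matches else "the bicycle is not red",
        "image_strictly_matches_desired_prompt": matches,
        "revised_prompt": current_prompt.replace("red", "vivid red"),
    }


final_prompt, rounds_used, matched = refine_image_prompt(
    "a red bicycle", fake_generate_image, fake_critique
)
print(final_prompt, rounds_used, matched)  # a vivid red bicycle 2 True
```

The `max_rounds` cap is my addition; without some limit, a loop like this could keep running if the image never matches.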

Have you come across other interesting use cases of DSPy?

Jargon explained

The only jargon I encountered this week was DSPy, which I explained above. I was mostly ideating and prototyping this week, so I didn’t come across other jargon.

Interesting links

DeepSeek Is Chinese But Its AI Models Are From Another Planet: My 66-year-old dad, who doesn’t know how to use the internet, recently asked me about DeepSeek. DeepSeek feels like a second ChatGPT moment in the AI era. This article by Alberto Romero is a great explanation of DeepSeek, the company and its models.

OmniParser V2: Microsoft just released an update on OmniParser, a tool to help LLMs understand and “see” screenshots, which will help AI agents navigate and use the web more easily.

“Export for prompt” button: Whether we like it or not, many people and friends use ChatGPT to do work. As developers of tools, why not make it easier for them? Linear added a shortcut for copying issues as markdown so that their customers can easily paste them into LLM apps. Andrej Karpathy called this concept “Export for prompt”.

Another Wrapper: Last year, many critics looked at popular AI apps and commented that they are simply GPT wrappers. Going against the grain, Fekri thought, “Let’s make it even easier for people to create GPT wrappers!”